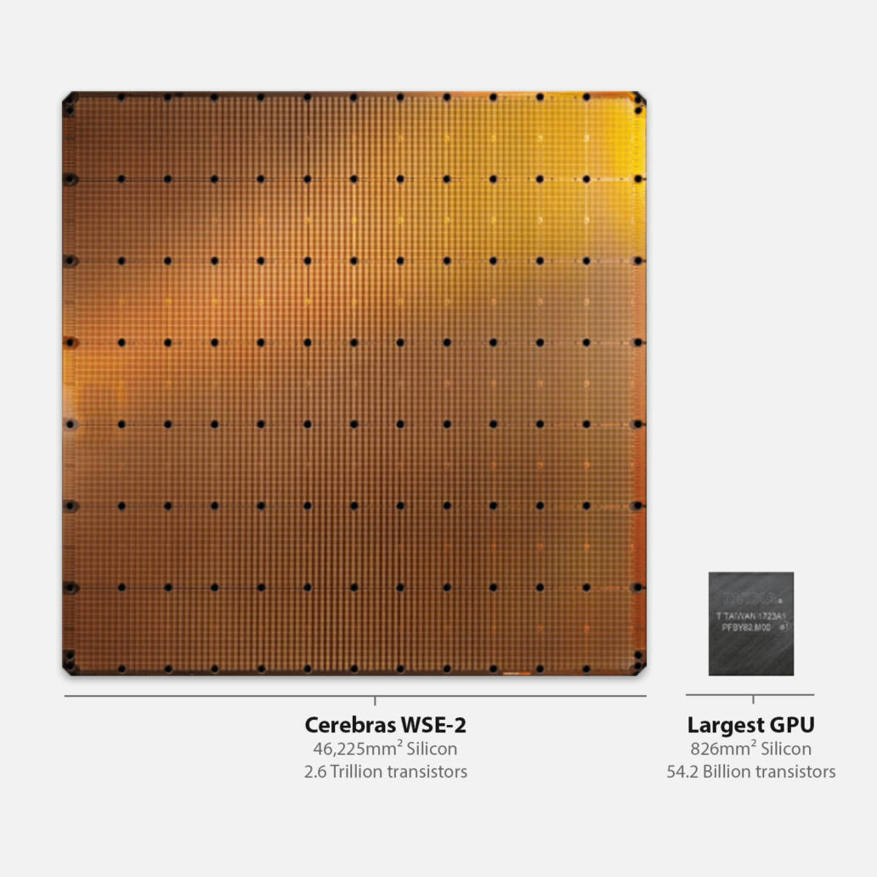

Artificial intelligence takes a lot of computing power, and Microsoft is putting together a road map for powering that computation with small nuclear reactors. That’s according to a job description Microsoft posted Thursday seeking a nuclear technology expert to lead the company’s technical assessment for integrating small modular nuclear reactors and microreactors “to power the datacenters that the Microsoft Cloud and AI reside on.”

Specifically, Microsoft is looking to hire a “principal program manager for nuclear technology” who “will be responsible for maturing and implementing a global Small Modular Reactor (SMR) and microreactor energy strategy,” the job posting reads. Microsoft is looking to generate energy with nuclear fission, in which the nucleus of an atom splits and releases energy.

News of this job description was first reported on DCD, a website about data centers. In January 2023, Microsoft announced a multiyear, multibillion-dollar investment in OpenAI, maker of the viral AI chatbot ChatGPT. Bill Gates, Microsoft’s co-founder, is also the chairman of the board of TerraPower, a nuclear innovation company that is developing and scaling small modular reactor designs. TerraPower “does not currently have any agreements to sell reactors to Microsoft,” a spokesperson told CNBC. However, Microsoft has publicly committed to buying nuclear energy from an innovator in the fusion space.

In May, Microsoft announced it had signed a power purchase agreement with Helion, a nuclear fusion startup, to buy electricity from it in 2028. Sam Altman, CEO of OpenAI, is an early and significant investor in Helion. Nuclear fusion occurs when two smaller atomic nuclei smash together to form a heavier nucleus and release tremendous quantities of energy in the process. It is how the sun produces energy. Fusion has not yet been recreated at scale on Earth, but many venture-backed startups are working to make it a reality because of its promise of virtually unlimited clean energy.

Interest in nuclear energy has increased alongside concerns about climate change in recent years, as nuclear reactors generate electricity while releasing virtually no carbon dioxide emissions.

The existing fleet of nuclear reactors in the U.S. was largely built between 1970 and 1990, and currently generates about 18% of the total electricity in the U.S., according to the U.S. Energy Information Administration. Nuclear energy also made up 47% of America’s carbon-free electricity in 2022, according to the U.S. Department of Energy.

Much of the hope for the next generation of nuclear reactor technology in the U.S. is pinned on smaller nuclear reactors, which Microsoft’s job posting indicates the company is interested in using to power its data centers.

Read the full article at: www.cnbc.com