McDonald’s kills AI drive-thru ordering after mistakes

McDonald’s is killing its AI drive-thru order experiment — at least for now — after social media reports

Discover five must-read books on AI and ChatGPT in education, recommended by educators. These books offer practical insights

By enabling abilities like context and natural conversation, generative AI technology could bring us actually useful digital assistants.

A new report reinforces that the virus is not going away. Even as

The first-ever nitrogen-fixing organelle in a eukaryotic cell has been confirmed.

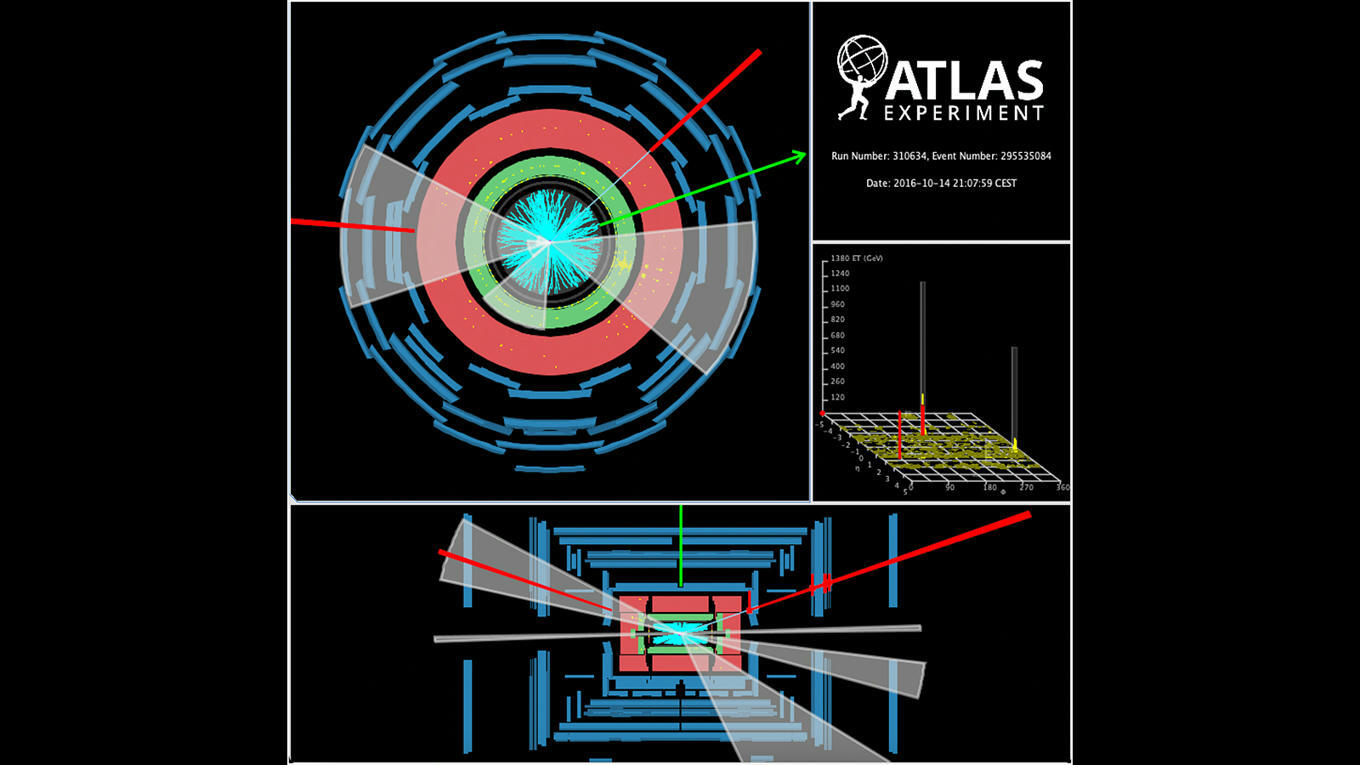

An event that is only

To protect and rear their young, some insects have transformed wild viruses

In the vibrant world of bartending, where every cocktail tells a story and every mix is

By definition, artists and designers are creative people. They work in these jobs because they have talent and

Eleven years of wonder, exploration, observation, study, making, remaking, and countless hours of grinding and polishing. Oftentimes, in

Scientists used a neural network, a type of brain-inspired machine learning algorithm, to sift