This week, Apple unveiled its Vision Pro spatial computer, but Mark Zuckerberg doesn’t seem fazed by its introduction.

Read the full article at: bgr.com

Thank you for reading my latest article, “Digital Twins, Generative AI, And The Metaverse.” Here at LinkedIn and at Forbes I regularly write about management and technology trends.

Read the full article at: www.linkedin.com

After taking a yearslong wait-and-see approach, Apple is now all in on a new “platform.”

Read the full article at: www.wired.com

Large language models are all the rage these days and new ones are popping up every other day. Most of these linguistic behemoths, including OpenAI’s ChatGPT and Google’s Bard, are trained on text data from all over the internet – websites, articles, books, you name it. This means that their output is a mixed bag of genius. But what if, instead of the web, LLMs were trained on the dark web? Researchers have done just that with DarkBERT, with some surprising results. Let’s take a look.

What is DarkBERT?

A team of South Korean researchers has released a paper detailing how they built an LLM on a large-scale dark web corpus collected by crawling the Tor network. The data included a host of shady sites from various categories including cryptocurrency, pornography, hacking, weaponry, and others. However, due to ethical concerns, the team did not use the data as is. To keep sensitive data out of the model, so that bad actors cannot extract it later, the researchers filtered the pre-training corpus before feeding it to DarkBERT.
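As a rough illustration of what filtering a crawled corpus can involve, the sketch below masks email and IPv4 addresses and drops very short pages. The patterns, the placeholder tokens, and the `min_words` threshold are assumptions chosen for this example; they are not the researchers' actual pipeline.

```python
import re

# Hypothetical patterns for illustration only; not taken from the DarkBERT paper.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def mask_sensitive(text: str) -> str:
    """Replace email and IPv4 addresses with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return IPV4_RE.sub("[IP]", text)


def filter_corpus(pages, min_words=5):
    """Drop pages with fewer than min_words words and mask the rest."""
    return [mask_sensitive(p) for p in pages if len(p.split()) >= min_words]
```

A real pipeline would likely cover more identifier types and include deduplication; this only shows the general shape of the step.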

If you are wondering about the rationale behind the name DarkBERT, the LLM is based on the RoBERTa architecture, which is a transformer-based model developed back in 2019 by researchers at Facebook.

Meta had described RoBERTa as a “robustly optimized method for pre-training natural language processing (NLP) systems” that improves upon BERT, which was released by Google back in 2018. After Google made the LLM open-source, Meta was able to improve its performance.

Cut to the present, the Korean researchers have improved upon the original model even further by feeding it data from the dark web over the course of 15 days, eventually arriving at DarkBERT. The research paper notes that a machine with an Intel Xeon Gold 6348 CPU and 4 NVIDIA A100 80GB GPUs was used for the purpose.

Read the full article at: indianexpress.com

Caltech isn’t the only organization that has become interested in solar power stations. The Chinese government is planning a 2028 mission to demonstrate the technology in low Earth orbit. And last November, science ministers in the E.U. greenlit Solaris, a joint project between the European Space Agency (ESA) and aerospace company Airbus to look into the possibility of building gigantic solar power stations in geostationary orbit over Europe. (Whether intentional or not, the linkage to the world of mid-century sci-fi remains, with the project sharing the title of Stanislaw Lem’s classic 1961 novel.)

Learn more:

https://www.scoop.it/topic/21st-century-innovative-technologies-and-developments/?&tag=Solar+Energy

Read the full article at: time.com

Neuralangelo, a new AI model by NVIDIA Research for 3D reconstruction using neural networks, turns 2D video clips into detailed 3D structures — generating lifelike virtual replicas of buildings, sculptures and other real-world objects.

Like Michelangelo sculpting stunning, life-like visions from blocks of marble, Neuralangelo generates 3D structures with intricate details and textures. Creative professionals can then import these 3D objects into design applications, editing them further for use in art, video game development, robotics and industrial digital twins.

Read the full article at: blogs.nvidia.com

Figure 01 is a bipedal, AI-powered humanoid. And it wants to work in a warehouse.

Key to Figure’s robotics approach is its reliance on artificial intelligence to enable its robots to learn and improve their abilities. As movements and performance evolve, the robots can progress from basic lifting and carrying tasks to more advanced functions. That work will start out in the warehouse setting, doing the kind of heavy lifting that people don’t particularly enjoy.

“Our business plan is to get to revenue as fast as we can. And honestly that means doing things that are technically easier,” says Figure founder Brett Adcock.

Single-purpose robots are already common in warehouses, like the roving robots that carry boxes to shelves in Amazon fulfillment centers, and a robot created for DHL Supply Chain that unloads boxes from trucks at warehouse loading bays. Human-like robots could be a more versatile solution for the warehouse, Adcock says. Instead of relying on one robot to unload a truck and another to haul boxes around, Figure’s plan is to create a robot that can do almost anything a human worker otherwise would.

In some ways, it’s a narrower approach to the humanoid robot, which many other companies have sought to build. Honda’s Asimo was intended to help a range of users, from people with limited mobility to older folks needing full-time care. NASA has invested in humanoid “robonauts” that are capable of assisting with missions in space. Boston Dynamics has pushed the limits of robotics by creating humanoids that can jump and flip.

“Existing humanoids today have just been stunts and demos,” Adcock says. “We want to get away from that and show that they can be really useful.” Figure has produced five prototypes of its humanoid. They’re designed to have 25 degrees of motion, including the ability to bend over fully at the waist and lift a box from the ground up to a high shelf. Hands will add even more flexibility and utility. That is the plan, at least. Right now, the prototype robots are mainly just walking.

Adcock expects to conduct extensive testing and refinement in the coming months, getting the robots to the point where they can handle most general warehouse applications by the end of the year. A pilot of 50 robots working in a real warehouse setting is being eyed for 2024. “Hardware companies take time,” he says. “This will take 20 or 30 years for us to really build out.”

With a reflective featureless face mask that may remind some viewers of a character from G.I. Joe, Figure 01 is a sleeker vision of the humanoid robots people may now be familiar with. Atlas, the walking, jumping, and parkour-enabled robot from the research wing of Boston Dynamics, for example, has prioritized mechanical skills over aesthetics.

Figure’s robot bears some similarity to Tesla’s Optimus robot. Announced in 2021 during a presentation featuring a human in a spandex robot suit, Optimus also aspires to a smoother form but its latest prototype looks more science project than science fiction. Adcock says Figure’s robots use advanced electric motors, enabling smoother movement than the hydraulically run Atlas, which allows the prototypes to have a more natural gait while also fitting the mechanical systems into a smaller package. “We want to fool like 90% of people in a walking Turing test,” he says.

The concept is being built around the notion that the robot can be continually improved over time, learning new skills and eventually expanding into more complex tasks. Being able to do more, Adcock says, makes what could be a very expensive device much more affordable to build and buy. That could someday lead to Figure’s robots working in manufacturing, retail, home care, or even outer space. “I really believe that humanoids will colonize planets,” Adcock says.

For now, Figure is targeting the humble warehouse. “If we unveil the humanoid at some big event, it’ll just be doing warehouse work on stage the whole time,” Adcock says. “No back flips, none of that crazy parkour stuff. We just want to do real, practical work.”

Read the full article at: www.fastcompany.com

The Singularity, often referred to as the Technological Singularity, is a hypothetical point in the near future at which artificial intelligence (AI) becomes so advanced that it surpasses human intelligence, leading to rapid, unforeseeable changes in society, technology, and life as we know it. The term was popularized by mathematician and computer scientist Vernor Vinge and futurist Ray Kurzweil.

At the Singularity, AI systems would be able to learn autonomously, improve themselves, and create new AI systems more capable and more intelligent than themselves, resulting in an exponential increase in intelligence. This self-improvement cycle could lead to AI systems that are vastly more capable and sophisticated than human minds, beyond anything humans can even imagine.

The Singularity is often associated with the development of artificial general intelligence (AGI), which is an AI system that can perform any intellectual task that a human being can do. AGI is distinct from narrow AI, which is designed for specific tasks and lacks the broad cognitive abilities and adaptability of human intelligence.

The implications of the Singularity are the subject of much speculation and debate today. Most people who have thought about it agree that the Singularity would be a decisive turning point in human history. Some view this with awe and trepidation, almost like a religious event that will lead to everlasting life and happiness. Many believe it will bring about tremendous breakthroughs in medicine, energy, and space exploration. Others are more cautious, expressing concerns about the ethical, societal, and existential risks associated with super-intelligent AI systems. Some predict doom and gloom. Others think it is all but impossible.

Predicting the Singularity Start Date

The general caveat experts attach to this question is that predicting when the Singularity might occur is highly uncertain, as it depends on the pace of AI research, breakthroughs in understanding human cognition, and the development of advanced hardware and software systems. Still, ChatGPT-4 has predicted a 5%-10% chance that the Singularity will happen within five years, by 2028. ChatGPT-4 did so without knowledge of what has happened since September 2021, when it was last fed data.

We know what has happened since September 2021, and we know the pace of change, even though the ChatGPT model does not. We know that when ChatGPT-3.5 was released in November 2022, it scored in the bottom 10% of the multi-state Bar exam. But just four months later, when 4.0 was released in March 2023, it scored in the top 10%. Let that sink in and remember no new data was provided. There was only improvement in reasoning ability. As lawyers who have all taken this test, we know better than anyone the significance of a jump from the bottom to the top 10% of this challenging test. Yes, it is getting pretty smart, fast. How much longer until its intelligence exceeds our own?

Opportunities and Challenges Presented by the Singularity

The three opportunities listed here, all good, are generally thought to be possible in a time of technological Singularity, a time when AI capabilities accelerate at an exponential rate.

- Accelerated Technological Progress: The Singularity could lead to rapid advancements in various fields, as super-intelligent AI systems develop new technologies and solve complex problems that were previously beyond human reach.

- Economic Growth: With AI systems handling tasks more efficiently and effectively than humans, productivity and economic growth could increase dramatically, potentially leading to higher standards of living and reduced global inequality.

- Scientific Discoveries: The Singularity might enable breakthroughs in areas such as healthcare, environmental sustainability, and space exploration, as AI systems analyze vast amounts of data, simulate complex phenomena, and generate innovative solutions to pressing challenges.

Conversely, as usual, there is a flip side to the opportunities. These are the generally accepted challenges, some might say threats, presented by the Singularity:

- Ethical Concerns: As AI systems surpass human intelligence, ethical considerations become paramount. Ensuring that AI systems behave ethically, adhere to human values, and respect individual rights will be crucial.

- Job Displacement: The widespread adoption of super-intelligent AI systems could result in job displacement across various industries, as human workers are replaced by more efficient AI. This raises concerns about unemployment, workforce retraining, and social safety nets.

- Existential Risks: The Singularity poses potential existential risks, as super-intelligent AI might act in ways that are harmful to humanity, either intentionally or unintentionally. Ensuring AI safety and robustness is essential to mitigate these risks.

Read the full article at: e-discoveryteam.com

AI reports by Dr Thompson

Cited: NBER AI report for US Government

The ChatGPT Prompt Book

Roadmap: AI’s next big steps…

Google Pathways: An Exploration…

Use cases for large language models…

What’s in my AI? Analysis of Datasets…

Bonum

The rising tide lifting all boats

Irrelevance of intelligence

Annual AI retrospectives

The sky is infinite (2022 AI retrospective)

The sky is bigger… (mid-2022 AI)

The sky is on fire (2021 AI retrospective)

AI models

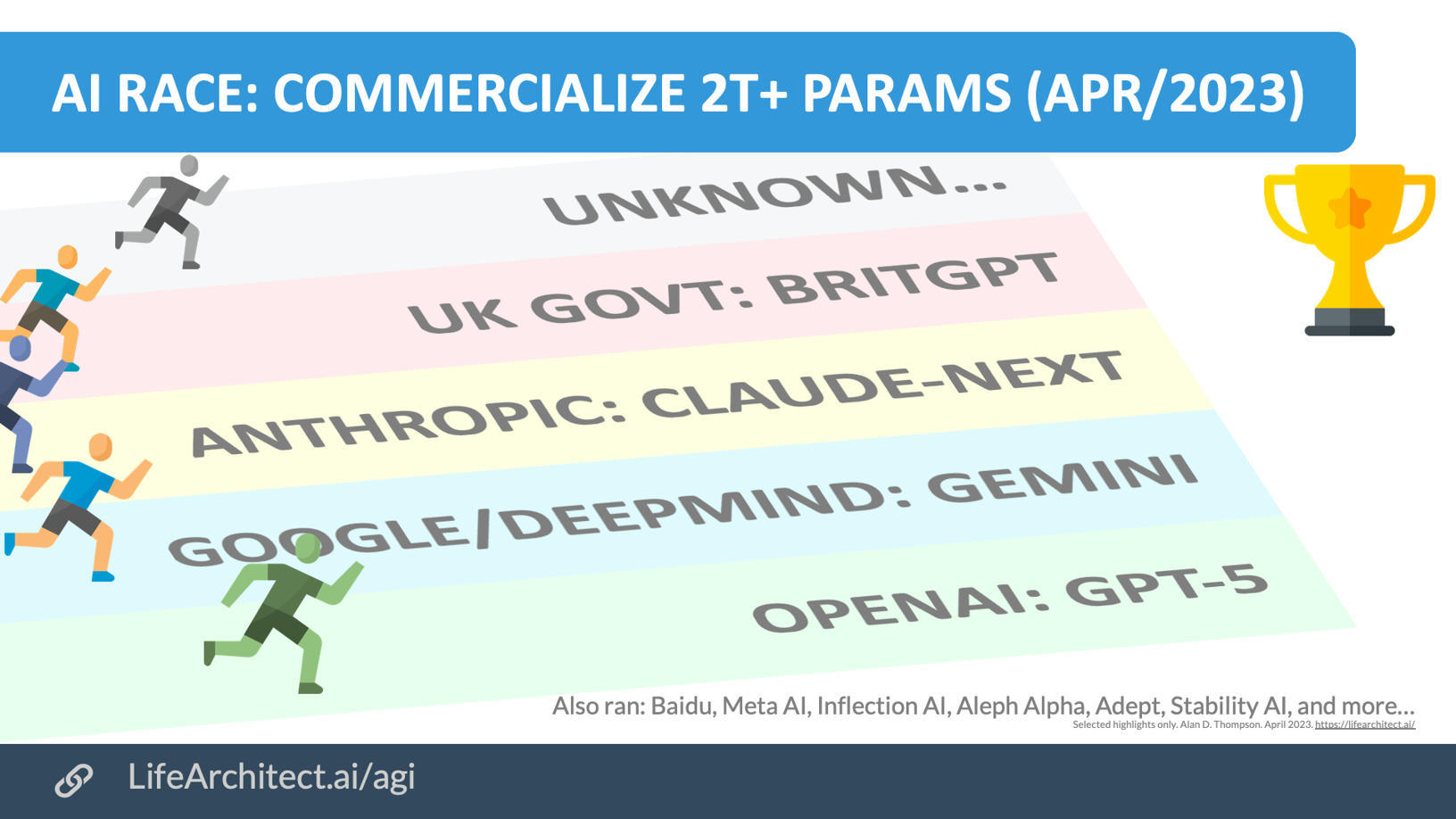

Google DeepMind Gemini

Snapchat My AI

GPT-5

Baidu ERNIE

GPT-4

Fudan MOSS

Google Bard

The GPT-3 Family: 50+ Models

Microsoft Bing Chat (Sydney)

Anthropic RL-CAI 52B

ChatGPT

DeepMind Sparrow

Chinchilla scaling laws

Megatron

Google Pathways

AI overview

The Gap: Waiting for the AI time lag

The AI Alignment Problem

AI: The Great Flood

GPT-3.5 and Raven’s

Talk to GPT

Large language models

AI report card

AI + IQ testing

Life-changing AI

Books written by AI

AI art

AI + the human brain

AI + BMIs

Synthesia

Replika

Learn more about AI

AI video

Una AI

Leta AI

GPT-3 vs IBM Watson

Aurora AI

Zhibing Hua AI (China)

AI media

Alan talks to ABC

AI is outperforming humans

AI fire alarm

AI sound bites

AI theory

The Who Moved My Cheese? AI awards!

Dr Ilya Sutskever

Dr Ray Kurzweil

Mocking AI panic

Arguments about AI

Why does AI make you so angry?

AI + economics

AI + ethics

AI + prompt crafting

AI + spirituality

AI timeline

AI papers

EleutherAI

Connor Leahy

Quotes about AI

Marvin Minsky

AI definitions

Get The Memo (w/ MIT, Meta, IBM, Tesla)

Read the full article at: lifearchitect.ai

Fake virtual identities are nothing new. The ability to so easily create them has been both a boon for social media platforms — more “users” — and a scourge, tied as they are to the spread of conspiracy theories, distorted discourse and other societal ills.

Still, Twitter bots are nothing compared with what the world is about to experience, as any time spent with ChatGPT illustrates. Flash forward a few years and it will be impossible to know if someone is communicating with another mortal or a neural network.

Sam Altman knows this. Altman is the co-founder and the CEO of ChatGPT parent OpenAI and has long had more visibility than most into what’s around the corner. It’s why more than three years ago, he conceived of a new company that could serve first and foremost as proof-of-personhood. Called Worldcoin, its three-part mission — to create a global ID, a global currency and an app that enables payment, purchases and transfers using its own token, along with other digital assets and traditional currencies — is as ambitious as it is technically complicated, but the opportunity is also vast.

In broad strokes, here’s how the outfit, still in beta and based in San Francisco and Berlin, works: To use the service, users must download its app, then have their iris scanned using a silver, melon-sized orb that houses a custom optical system. Once the scan is complete, the individual is added to a database of verified humans, and Worldcoin creates a unique cryptographic “hash” or equation that’s tied to that real person. The scan isn’t saved, but the hash can be used in the future to prove the person’s identity anonymously through the app, which includes a private key that links to a shareable public key. Because the system is designed to verify that a person is actually a unique individual, if the person wants to accept a payment or fund a specific project, the app generates a “zero-knowledge proof” — or mathematical equation — that allows the individual to provide only the necessary amount of information to a third party. Some day, the technology might even help people to vote on how AI should be governed. (A piece in the outlet IEEE Spectrum better spells out the specifics of Worldcoin’s tech.)
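The enroll-once idea described above can be sketched in a few lines. This is a toy, not Worldcoin's actual scheme: it assumes the scan has already been reduced to a stable byte string (`iris_code`, a hypothetical name), hashes it with SHA-256, and keeps only the hash. Real biometric readings vary from scan to scan, so a production system would need fuzzy matching rather than an exact hash, and the zero-knowledge proof step is omitted entirely.

```python
import hashlib


class PersonhoodRegistry:
    """Toy registry that stores only hashes, never the raw scan."""

    def __init__(self):
        self._hashes = set()

    @staticmethod
    def digest(iris_code: bytes) -> str:
        """Return the SHA-256 hex digest of the (assumed stable) iris code."""
        return hashlib.sha256(iris_code).hexdigest()

    def enroll(self, iris_code: bytes) -> bool:
        """Return True if newly enrolled, False if already registered."""
        h = self.digest(iris_code)
        if h in self._hashes:
            return False
        self._hashes.add(h)
        return True

    def is_enrolled(self, iris_code: bytes) -> bool:
        """Check membership without ever storing the scan itself."""
        return self.digest(iris_code) in self._hashes
```

Because only the digest is stored, the registry can reject a second enrollment of the same code without being able to reconstruct the scan from what it holds.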

Investors eager to be in business with Altman jumped at the chance to fund the outfit almost as soon as it was imagined, with Andreessen Horowitz, Variant, Khosla Ventures, Coinbase and Tiger Global providing it with $125.5 million. But the public has been more wary. When Bloomberg reported in June 2021 that Altman was at work on Worldcoin, many questioned its promise to give one share of its new digital currency to everyone who agreed to an iris scan. Worldcoin said it needed to be decentralized from the outset so it could deliver future currency drops as part of universal basic income programs. (Altman has long predicted that AI will generate enough wealth to pay every adult some amount of money each year.) From Worldcoin’s perspective, the crypto piece was necessary. Yet some quickly deemed it another crypto scam, while others questioned whether a nascent startup collecting biometric data could truly secure its participants’ privacy.

Altman later said the press coverage stemmed from a “leak” and that Worldcoin wasn’t ready to tell its story in 2021. Now, reorganized under a new parent organization called Tools for Humanity that calls itself both a research lab and product company, the outfit is sharing more details. Whether they’ll be enough to win over users is an open question, but certainly, more people now understand why proving personhood online is about to become essential.

Read the full article at: techcrunch.com