An autonomous drone has competed against human drone-racing champions — and won. The victory can be attributed to savvy engineering and a type of artificial intelligence that learns mostly through trial and error.

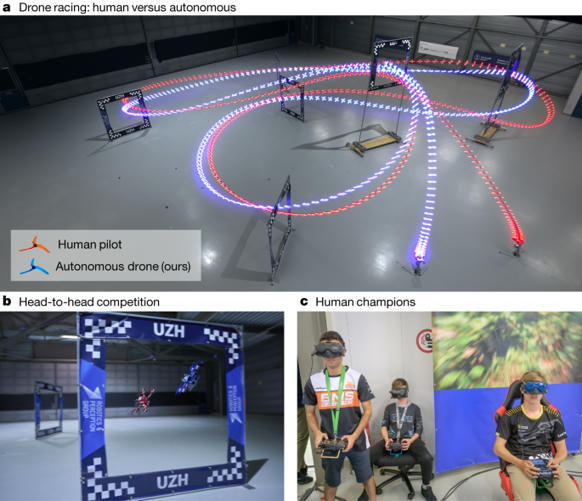

First-person view (FPV) drone racing is a televised sport in which professional competitors pilot high-speed aircraft through a 3D circuit. Each pilot sees the environment from the perspective of their drone by means of video streamed from an onboard camera. Reaching the level of professional pilots with an autonomous drone is challenging because the robot needs to fly at its physical limits while estimating its speed and location in the circuit exclusively from onboard sensors.

In the paper, the authors introduce Swift, an autonomous system that can race real drones at the level of the human world champions. The system combines deep reinforcement learning (RL) in simulation with data collected in the physical world. Swift competed against three human champions, including the world champions of two international leagues, in real-world head-to-head races. It won several races against each of the champions and set the fastest recorded race time.
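One way to picture "RL in simulation combined with real-world data" is a simulator whose predictions are corrected by a residual model fitted to measurements from the physical drone. The sketch below is a toy illustration under stated assumptions, not Swift's implementation: the 1D dynamics, the drag coefficient, the logged data in `REAL_WORLD_LOG`, and the k-nearest-neighbour regression are all hypothetical choices made here for clarity. The RL training loop itself is omitted; `rollout` only shows the corrected simulator a policy could be trained in.

```python
# Toy sketch (hypothetical): correct a simple simulator with a residual
# learned from real-world data, so a policy trained in simulation sees
# dynamics closer to the physical drone.

# Hypothetical logged data: (speed, real_accel - simulated_accel) pairs,
# standing in for measurements collected on the physical drone.
REAL_WORLD_LOG: list[tuple[float, float]] = [
    (2.0, -0.10),
    (5.0, -0.45),
    (8.0, -1.20),
    (11.0, -2.30),
]


def simulated_accel(speed: float, thrust: float) -> float:
    """Nominal toy simulator: thrust minus a quadratic drag term."""
    return thrust - 0.01 * speed ** 2


def residual_knn(speed: float, log: list[tuple[float, float]], k: int = 2) -> float:
    """Estimate the sim-to-real residual by averaging the k logged
    samples whose speed is closest to the query speed."""
    nearest = sorted(log, key=lambda row: abs(row[0] - speed))[:k]
    return sum(residual for _, residual in nearest) / len(nearest)


def corrected_accel(speed: float, thrust: float,
                    log: list[tuple[float, float]] = REAL_WORLD_LOG) -> float:
    """Simulator prediction plus the data-driven residual correction."""
    return simulated_accel(speed, thrust) + residual_knn(speed, log)


def rollout(thrust: float, steps: int = 50, dt: float = 0.1,
            use_residual: bool = True) -> float:
    """Integrate speed with explicit Euler steps; return the final speed."""
    speed = 0.0
    for _ in range(steps):
        if use_residual:
            accel = corrected_accel(speed, thrust)
        else:
            accel = simulated_accel(speed, thrust)
        speed += accel * dt
    return speed


if __name__ == "__main__":
    print("nominal sim final speed:  ", rollout(3.0, use_residual=False))
    print("corrected sim final speed:", rollout(3.0, use_residual=True))
```

Because every hypothetical residual here is negative (the "real" drone accelerates less than the nominal model predicts), the corrected rollout ends at a lower speed. A policy trained against the corrected simulator would therefore learn not to rely on acceleration the physical vehicle cannot deliver.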

This work marks a milestone for mobile robotics and machine intelligence, and it may inspire the deployment of hybrid learning-based solutions in other physical systems. By pairing deep reinforcement learning with onboard sensors that collect data from the physical world, the system flew around a race track faster than human world-champion drone pilots.

Read the full article at: www.nature.com